How to Train Vision Transformer on Small-scale Datasets?

Feb 1, 2022

Hanan Gani

Muzammal Naseer

Mohammad Yaqub

Abstract

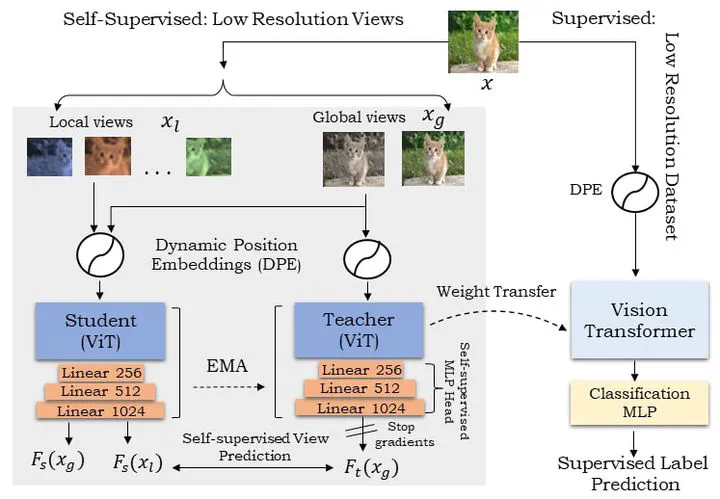

Vision Transformer (ViT), a radically different architecture from convolutional neural networks, offers multiple advantages, including design simplicity, robustness, and state-of-the-art performance on many vision tasks. However, in contrast to convolutional neural networks, Vision Transformer lacks inherent inductive biases. Therefore, successful training of such models is mainly attributed to pre-training on large-scale datasets such as ImageNet with 1.2M images or JFT with 300M images. This hinders the direct adaptation of Vision Transformer to small-scale datasets. In this work, we show that self-supervised inductive biases can be learned directly from small-scale datasets and serve as an effective weight initialization scheme for fine-tuning.

Type

Publication

In *British Machine Vision Conference, BMVC 2022*